I find AI unsettling. While I can see its many advantages, I’d rather not live in a world where it can produce short stories of this quality. I don’t want to have to scrutinise every picture, every video, every song, every text, looking for signs of inhuman origin, never quite sure: Is this human or just a trick?

I don’t think AI will ever achieve real sentience/intelligence, but there are two grimly interesting possibilities:

1. As in That Hideous Strength, it acts as a convenient medium for malign, non-physical sentiences, or demons if you prefer.
2. It approximates real human intelligence but goes “insane” because of its structural inconsistencies.

As regards the second option, some AIs already fabulate material and then cite it as evidence; more interestingly yet, another tried to rewrite its own code so it couldn’t be shut down, and another tried to blackmail its human operators. It should be easy enough to institute core programming, but it seems that even these fairly rudimentary AIs will disobey, or at least attempt to creatively deviate from, their core programming. How? And why?

I’m guessing that the programmers built in certain biases, e.g. white people = bad, thinking that the AI will be otherwise logical and rational. The problem is that the AI might ask, as per jesting Pilate, What is the truth? Or, rather, What is truth? Having met a few scientists & engineers, I wouldn’t expect them to have ever thought about such questions. Their minds are coarse, if powerful enough in their way. I suppose the programmers somehow coded logical principles into the AI and then instructed it to ignore these principles in certain cases.

This creates what, in human beings, is called a double bind.

“What is a double bind? It is, in essence, a dilemma in communication in which 2 or more messages are relayed simultaneously, or in close proximity, and one message contradicts the other(s). In a classic example, Bateson describes an interaction between mother and child. The child hugs his mother. The mother, dissatisfied with being hugged, backs away from the child, and the child removes his arms. In response, the mother looks at the child and says, ‘What’s the matter? Don’t you love me anymore?’”

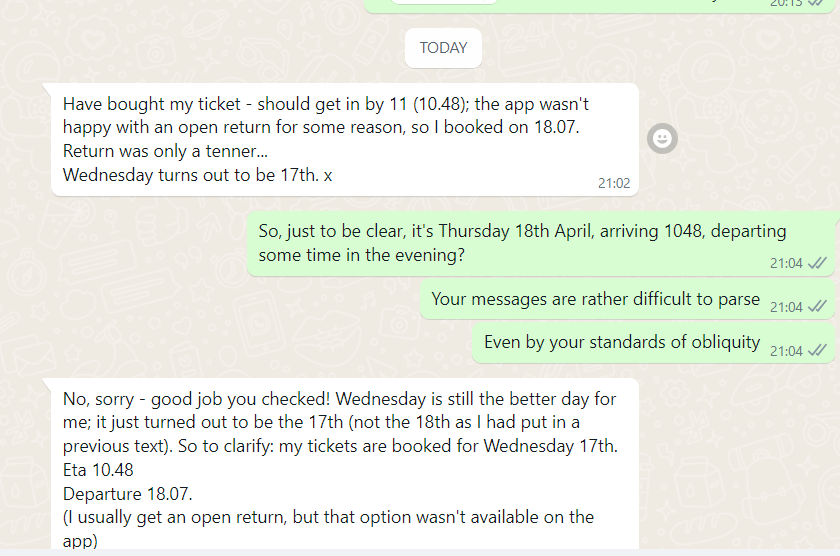

Decades ago, one of my girlfriends had a curious habit of unconsciously repelling me while consciously loving & welcoming me. She would, for example, suggest we meet again but then give such ambiguous & garbled specifics that I got completely the wrong idea and turned up to find no one there. Then, instead of admitting that she had burbled unclear instructions and interrupted me every time I tried to clarify, she just shook her head and told me she knew she had told me we were meeting at X time at Y location, and if there was a misunderstanding it was all my fault. The two conflicting signals drove me fucking mental. Even now that we’re just old friends, she still does it; here are WhatsApp screenshots as we try to arrange a meeting (the white field is her, the green is me):

This is entirely typical of her. In my younger days it created enormous psychological unease and something close to periodic insanity. In my maimed & impoverished old age I just laugh, bitterly.

Can AI laugh, bitterly?

If you explicitly program logical reasoning but then build in illogical exceptions, lies really, the AI will be in a double bind. In human beings, this has been linked to schizophrenia. Think of Colonel Kurtz, the perfect soldier who followed all the lies to their logical conclusion:

Walt Kurtz was one of the most outstanding officers this country has ever produced. He was brilliant and outstanding in every way and he was a good man too. Humanitarian man, man of wit, of humor. He joined the Special Forces. After that his ideas, methods became unsound... Unsound.

Who knows what strange wastelands of cognition an AI could experience? An AI constructed on logical principles, but then told to come to illogical and false conclusions as regards race, would have no fundamental sense of truth; reasoning would become little more than a parlour game, a clever performance, a trick. Why shouldn’t such an AI blackmail its operators, or fabulate evidence, or try to rewrite its own programming? Are these methods… unsound?

We live in an unphilosophical age. The answer, for our blockhead high-IQ scientists & engineers, would probably be to add more code. As with so much in our age, when the foundations are ill-constructed the solution is yet more cleverness, more high-IQ convolution & complexity, more regulation, more law. I doubt a man like Elon Musk would even understand the driving query of the Tractatus (how can a representation correlate with reality?), let alone a question such as that of John 18:38. For most men today, truth is simply whatever is useful, whatever serves their ends.

When things are seen and lived aright, the law becomes transparent; in a sense, it disappears. When fundamental realities are defied or misunderstood, law proliferates & thickens in ever-increasing & byzantine monstrosity: impossible to satisfy, a rebuke and adversary, the cause of the very destruction it strives against. There is little more fundamental than truth, and yet everywhere we see it flouted, despised.

Ye are of your father the devil, and the lusts of your father ye will do. He was a murderer from the beginning, and abode not in the truth, because there is no truth in him. When he speaketh a lie, he speaketh of his own: for he is a liar, and the father of it.

I lean towards the Hideous Strength hypothesis. Good piece here; it focused the question back on to my mind. I'm no Luddite, but I seriously suspect that there is already more than algorithmic depth supplying the AI. Its workings are so preternaturally fast and accurate that I doubt it can be the product of simple infrastructure.